I vibe coded three features for my Python packages and projects

While at PGConf.EU, I was inspired to modify Render-Engine (my static-site generator) to support loading pages stored in a database. I also saw that Claude had recently announced skills. That, plus the confidence in AI of my good friend Jeff, made me want to see if I could improve my vibe coding (really more planned AI development) skills, so I committed to using AI more heavily than usual in my coding.

If you're reading this, it means that I successfully implemented all the features and it's live now.

The Features

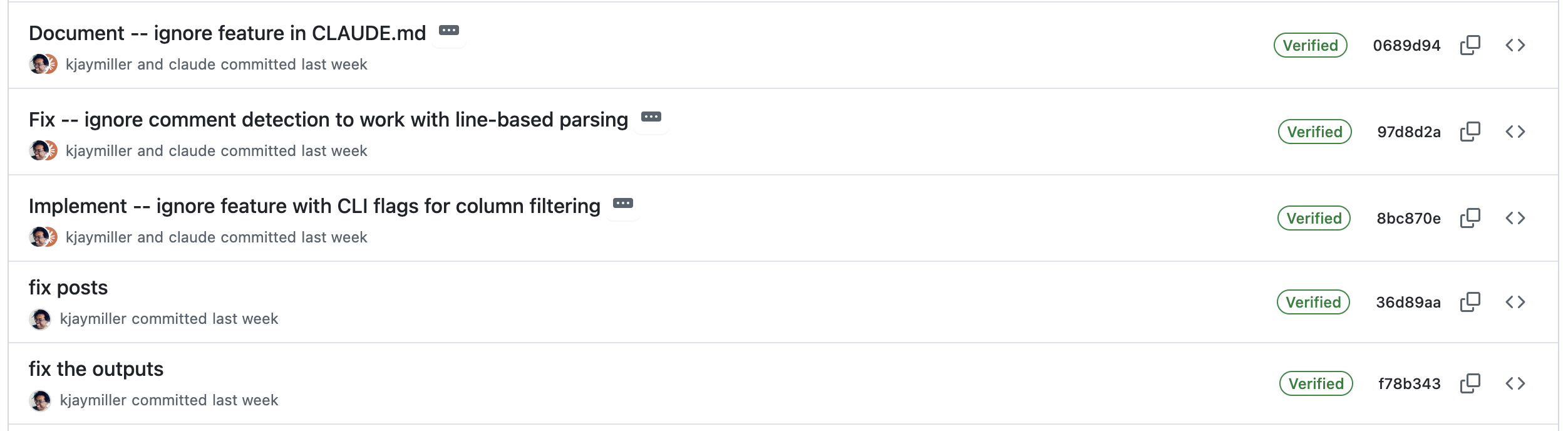

There were 3 experiments that I tested AI with. The first was working out the next steps to make my pg parser compatible with Render Engine by updating the ContentManager and the PageParser. This was mostly done at Schiphol Airport in Amsterdam, with me finishing the implementation plan just as my boarding group was wrapping up. Once I got home, I implemented the plan, fixed the bugs, and I was once again reading content from and inserting content into the database.

Once that was working, I built a Text User Interface (TUI) to let me create and manage blog posts. It was built with Textual and is just for my site, but it will be the inspiration for a better version that lives with the pg-parser.

Then, I wanted to create a CLI that would look at a PostgreSQL schema file and create the read and insert queries to use. This was built mostly with a render-engine agent that I created and instructed to look at all the plugins, websites, and documentation.
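To give a rough idea of what that kind of generator does, here is a minimal sketch. It is not the actual CLI code: the schema, the regex-based parsing, and the function names (`parse_tables`, `insert_query`, `read_query`) are all assumptions made up for illustration, and a real schema file would need a proper SQL parser.

```python
import re

# Hypothetical, simplified schema; the real schema file is not shown in this post.
SCHEMA = """
CREATE TABLE blog (
    id SERIAL PRIMARY KEY,
    slug TEXT NOT NULL,
    title TEXT NOT NULL,
    date TIMESTAMP
);
"""

# Naive pattern: assumes one column per line and no ");" inside the table body.
CREATE_TABLE = re.compile(
    r"CREATE TABLE\s+(\w+)\s*\((.*?)\);", re.IGNORECASE | re.DOTALL
)
CONSTRAINT_KEYWORDS = {"PRIMARY", "FOREIGN", "UNIQUE", "CONSTRAINT", "CHECK"}


def parse_tables(schema_sql: str) -> dict[str, list[str]]:
    """Map each table name to its column names."""
    tables = {}
    for name, body in CREATE_TABLE.findall(schema_sql):
        columns = []
        for line in body.splitlines():
            words = line.strip().rstrip(",").split()
            # Skip blank lines and table-level constraints.
            if words and words[0].upper() not in CONSTRAINT_KEYWORDS:
                columns.append(words[0])
        tables[name] = columns
    return tables


def insert_query(table: str, columns: list[str], skip=("id",)) -> str:
    """Build an INSERT with {column} placeholders, skipping generated columns."""
    cols = [c for c in columns if c not in skip]
    placeholders = ", ".join(f"{{{c}}}" for c in cols)
    return f"INSERT INTO {table} ({', '.join(cols)}) VALUES ({placeholders});"


def read_query(table: str, columns: list[str]) -> str:
    """Build a SELECT that names every column explicitly."""
    cols = ", ".join(f"{table}.{c}" for c in columns)
    return f"SELECT {cols} FROM {table};"


tables = parse_tables(SCHEMA)
for table, columns in tables.items():
    print(insert_query(table, columns))
    print(read_query(table, columns))
```

The generated strings use the same `{column}` placeholder style as the queries in my config, which is the only part of this sketch taken from the real project.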

Lastly, I wanted to modify the ContentManager and Parser to search for queries in pyproject.toml and use them. This allows for a quasi-flexible approach that adapts to each collection.

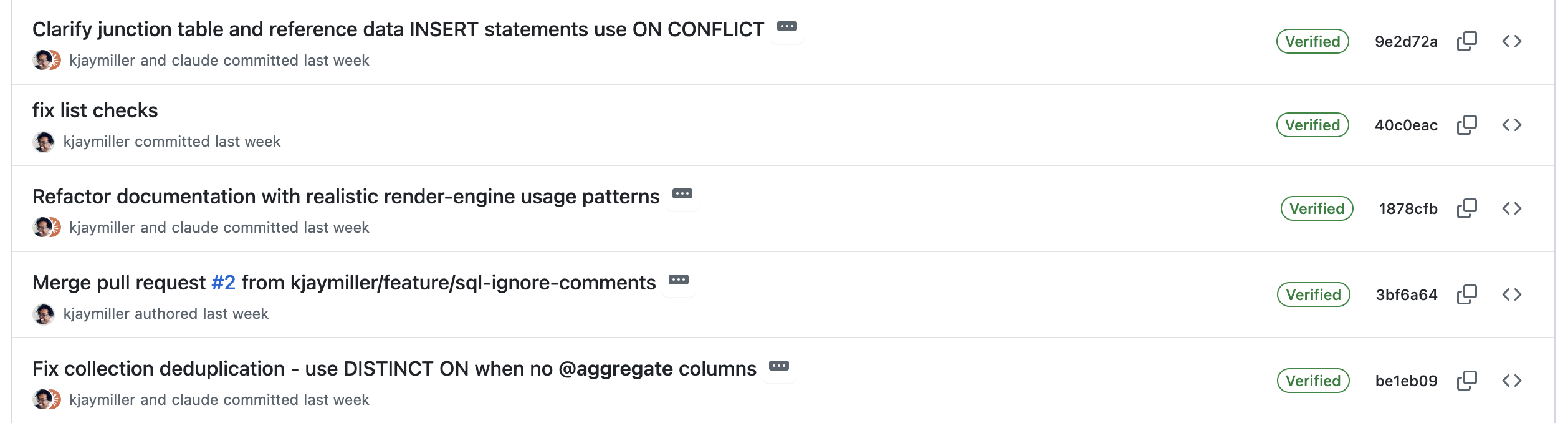

Here are the queries used for my blog.

[tool.render-engine.pg.insert_sql]

blog = [

"INSERT INTO tags (name) VALUES ({name}) ON CONFLICT (name) DO UPDATE SET name = EXCLUDED.name RETURNING id;",

"INSERT INTO blog (slug, title, content, description, external_link, image_url, date) VALUES ({slug}, {title}, {content}, {description}, {external_link}, {image_url}, {date});",

"INSERT INTO blog_tags (blog_id, tag_id, created_at) VALUES ({blog_id}, {tag_id}, {created_at});",

]

[tool.render-engine.pg.read_sql]

blog = "SELECT blog.id, blog.slug, blog.title, blog.content, blog.description, blog.external_link, blog.image_url, blog.date, array_agg(DISTINCT tags.name) as tags_names FROM blog LEFT JOIN blog_tags ON blog.id = blog_tags.blog_id LEFT JOIN tags ON blog_tags.tag_id = tags.id GROUP BY blog.id, blog.slug, blog.title, blog.content, blog.description, blog.external_link, blog.image_url, blog.date ORDER BY blog.date DESC;"

The fact that you are reading this means that everything worked!

Learning 1: Context and prework make the process better... kinda

Perhaps this is something we should be doing when developing anyway, but the accuracy of the LLM depends on the amount of planning and guidance you give it. The initial development plan let me review the code before it was implemented and see how my prompt was interpreted, so I could make small adjustments and ask follow-up questions like "how are you going to test this implementation" or "how does this plan keep in line with (important policy I put in place to keep things on the rails)".

With Claude Code, it feels almost like a requirement to run '/init' on any project first and let it analyze your code. More importantly, make sure you have agents meant to be the experts. I gained trust in my project after I had my render-engine agent build the plan based on the docs and the code of other render-engine parsers and the sites that use them. Any time it suggested a feature, I could immediately challenge it with "how would I use this" and it would give me almost the right usage.

That's the problem. It still doesn't work perfectly. That said, the things that were wrong were obvious enough that I could challenge them. It's annoying that AI never seems to be fully correct, but perhaps it would be if I added more context.

That's the other problem. The amount of context needed is incredibly annoying for one-off projects. If you're developing a feature or a small project, preparing your AI takes an annoying amount of work. That said, I think there is some corporate value in having humans help maintain the context of agents that will be used across an org, like what was announced at GitHub Universe this year.

Learning 2: Add tests

This was huge. Of course it's important to have tests... but what I've noticed is that forcing Claude to follow a more TDD approach based on the plan keeps hallucinations limited to the scope of new features. It also ensures that when code needs to be rewritten, it gets checked against all the tests.

Also, when a test fails, you can say "WITHOUT CHANGING THE TESTS... make them pass."

Learning 3: Early Decisions cause problems down the line

The issues that I come across often start in the initial decision-making process. It's like a snowball: the earlier you correct bad decisions, the easier they are to fix, but if you wait too long there will be so much context built on top of them that the fix gets much harder.

This is primarily why I like the idea of creating an initial implementation spec that outlines:

- the implementation plan

- how it will be integrated with other tools

- what challenges will be faced

This is great because a spec is often easier to read and update before you have your AI make lots of changes that will be harder to follow down the line... You can also include the spec in a PR.

Learning 4: Knowledge Saves Time

I'm not the most knowledgeable about PostgreSQL querying. I say that because sometimes I don't know what I need to make sure a query is doing.

In the most obvious case in this experiment, I didn't think about making sure that my queries returned one distinct row per id. This meant that I would get multiple entries for the same post, one for each tag. I also wasn't thinking about how I would update content or handle errors.
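The duplicate-row problem is easy to reproduce. The sketch below uses an in-memory SQLite database as a stand-in for PostgreSQL (an assumption made so the example runs anywhere), with SQLite's `group_concat` playing the role that `array_agg` plays in my real read query. The table contents are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE blog (id INTEGER PRIMARY KEY, slug TEXT);
    CREATE TABLE tags (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE blog_tags (blog_id INTEGER, tag_id INTEGER);
    INSERT INTO blog VALUES (1, 'hello-world');
    INSERT INTO tags VALUES (1, 'python'), (2, 'postgres');
    INSERT INTO blog_tags VALUES (1, 1), (1, 2);
    """
)

JOINS = """
    FROM blog
    LEFT JOIN blog_tags ON blog.id = blog_tags.blog_id
    LEFT JOIN tags ON blog_tags.tag_id = tags.id
"""

# Without grouping, the join returns one row per (post, tag) pair.
naive = conn.execute(
    f"SELECT blog.id, blog.slug, tags.name {JOINS} ORDER BY tags.id"
).fetchall()

# Grouping by the post collapses the tags into a single row per post.
grouped = conn.execute(
    f"SELECT blog.id, blog.slug, group_concat(tags.name) {JOINS} "
    "GROUP BY blog.id, blog.slug"
).fetchall()

print(len(naive))    # 2 rows for a single post with two tags
print(len(grouped))  # 1 row for that same post
```

One post with two tags comes back twice from the naive join, which is exactly the kind of thing that is obvious once you know to look for it.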

This danger of AI has been covered fairly well. In fact, this is the number one issue we get from new contributors to projects (including Render-Engine). Devs will see something marked good first issue and, instead of using it to get familiar with the code base, will have their LLM tackle it. When our maintainers kindly suggest a change, the devs are not willing to do the work because they have moved on to the next good first issue.

I've mentioned this before, but I have used AI to handle the things that I'm familiar with so that I can focus on the things that I'm hoping to learn or develop stronger skills in. My rule of thumb will always be: I'm not going to have AI write for me what I don't know. (It can show me how to write it, but I need to be the one doing the writing.)

Overall Takeaways:

Development speed is one of those things that is attributed to developer skill. I mean, these companies believe that AI will be doing the coding for us and that this will increase productivity. But I don't think it saves as much time as they expect (if any at all).

That said, it will force me to be more meticulous and it will make even my simplest projects more complete. You can create a spec on how to write docs that help your future self. Claude also gets instructions from a CLAUDE.md file, and you can have a folder of agents that help you use GH properly, establish your tests, create development plans, and keep Jira up to date. I think the benefits should make development clearer for all involved.

That said, the render-engine-pg-parser is in beta and will be released as stable once I better understand how the code integrates with the new changes being finished up in Render Engine (look for a 2025.11.1 release). I've also included my CLAUDE.md files and one of the agents in the projects if you want to look at them (in my personal site and in the parser).

Regarding the future of vibe/spec coding, I think it will reduce the number of developers, but this is a problem. Developers want to develop, and having them write the code is always going to build more knowledgeable devs (in both your codebase and general development). I find it funny that the tips and tricks that make AI more reliable in coding are those of project management.¹ Currently purging middle management and asking our developers to become middle managers doesn't seem like the right response. We're giving them more protocol and less Python (or whatever your language is). I think this will be a non-starter for many professionals, and they will continue to write their code with their coding assistants giving them [AI pause] until, hopefully, we feel less pressure from our employers to use these systems all the time.

¹ This isn't me saying that we should replace the product managers either... they are going to be able to take these specs and guides and turn them into something that keeps developers on task and decision makers informed.